Good, actionable, and timely data is at the heart of an organization’s digital transformation journey. With data pouring in from multiple sources, in different formats and across multiple environments, traditional ETL methods for data integration can no longer cope with the growing volume, variety and velocity of data. Data virtualization (DV) can help by acting as a bridge between disparate data sources, unifying them to deliver a single source of truth for consumers.

How does data virtualization provide value for your organization?

Data virtualization removes the need to move or copy data out of its source systems. Instead, it connects data consumers with data sources through an abstraction layer, enabling fast data delivery across all channels.
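The following is a minimal Python sketch of what such an abstraction layer does. It assumes two toy sources, an in-memory SQLite table and a CSV export, and a hypothetical `VirtualLayer` class that maps logical view names to reader functions. Consumers ask for a view by name, and the layer reads from the source at query time instead of copying data into a central store. Commercial DV platforms add query optimization, caching and security on top, so treat this only as an illustration of the principle.

```python
import csv
import io
import sqlite3
from typing import Callable, Dict, List

Row = Dict[str, object]


class VirtualLayer:
    """Hypothetical abstraction layer: maps logical view names to source readers."""

    def __init__(self) -> None:
        self._views: Dict[str, Callable[[], List[Row]]] = {}

    def register(self, name: str, reader: Callable[[], List[Row]]) -> None:
        # Only the reader function is stored; no data is copied at registration time.
        self._views[name] = reader

    def query(self, name: str) -> List[Row]:
        # Data is read from the underlying source every time a consumer asks.
        return self._views[name]()


# Source 1: a relational database (in-memory SQLite for the demo).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
db.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])


def read_customers() -> List[Row]:
    cur = db.execute("SELECT id, name FROM customers ORDER BY id")
    cols = [c[0] for c in cur.description]
    return [dict(zip(cols, r)) for r in cur.fetchall()]


# Source 2: a flat-file export (a CSV string standing in for a file or object store).
ORDERS_CSV = "order_id,customer_id,amount\n100,1,250.0\n101,2,75.5\n"


def read_orders() -> List[Row]:
    return [
        {"order_id": int(r["order_id"]), "customer_id": int(r["customer_id"]),
         "amount": float(r["amount"])}
        for r in csv.DictReader(io.StringIO(ORDERS_CSV))
    ]


layer = VirtualLayer()
layer.register("customers", read_customers)
layer.register("orders", read_orders)

# The consumer neither knows nor cares that one view is SQL and the other is CSV.
print(layer.query("customers"))
print(layer.query("orders"))
```

Because the layer stores readers rather than rows, a change in the source database shows up in the next `query` call without any reload step.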

In the not-so-distant past, businesses relied on physical data warehouses to store, process and use data for business analytics. However, data warehouses and their supporting architecture of data marts, data lakes (centralized repositories of structured and unstructured data) and integration processes are neither cost-effective nor agile enough for today’s data needs. DV lets businesses unify all data sources under a single logical data layer that provides real-time access along with centralized security and governance, without physically moving or replicating the underlying data. The resulting gains in speed and visibility have quickly made data virtualization a critical capability for businesses operating in hybrid or cloud-native environments.

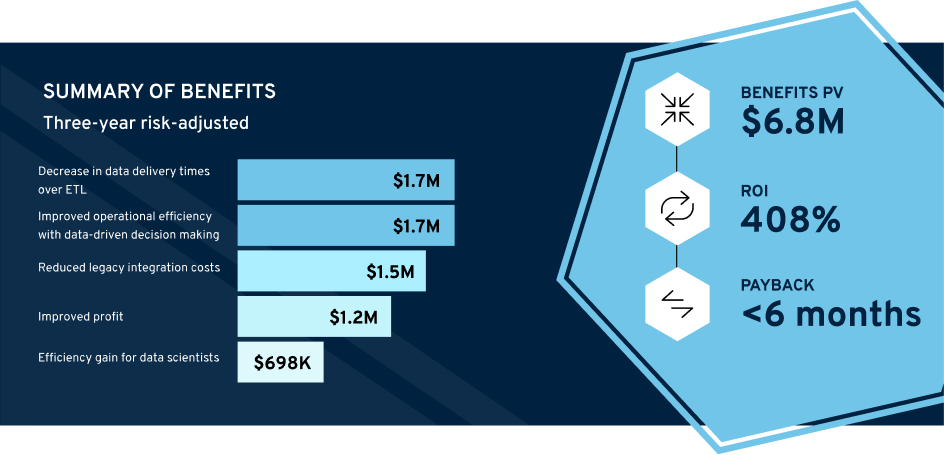

According to The Total Economic Impact™ Of Data Virtualization, a study commissioned by Denodo, data virtualization reduced data delivery times by at least 65% compared with ETL processes. It also reduced time-to-revenue by 83%, and data scientists spent 67% less time on data modeling.

Figure: The business case for data virtualization: its tangible benefits

Data virtualization in action

Imagine a data lake holding large volumes of data waiting to be extracted, transformed into a target schema and then loaded into a system for BI (Business Intelligence) and analytics. The process is long, cumbersome and centralized. Because the data sits in silos and access is not democratized, queries and reports take a long time to run. Depending on how volatile the datasets are, the result of a query may no longer be valid by the time the data user receives it. The problem is worse with monolithic systems, which lack flexibility when working with different data sources and schemas, further limiting what an organization can achieve with its data.
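The staleness problem can be shown with a small, self-contained Python sketch. It assumes an in-memory SQLite table as the operational source and a second in-memory database as the analytics store; `run_etl_batch`, `read_warehouse` and `read_virtual` are hypothetical names for an ETL-style load, a read from the loaded copy and a virtualized read. The transform step is omitted for brevity. After the source changes between batch runs, only the virtualized read reflects the update.

```python
import sqlite3
from typing import List, Tuple

# Operational source system (in-memory SQLite stands in for a production database).
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE inventory (sku TEXT PRIMARY KEY, qty INTEGER)")
source.executemany("INSERT INTO inventory VALUES (?, ?)", [("A1", 10), ("B2", 4)])

# Separate analytics store that the ETL batch loads into.
warehouse = sqlite3.connect(":memory:")


def run_etl_batch() -> None:
    """ETL style: extract rows from the source and load a physical copy."""
    rows = source.execute("SELECT sku, qty FROM inventory").fetchall()
    warehouse.execute("DROP TABLE IF EXISTS inventory_copy")
    warehouse.execute("CREATE TABLE inventory_copy (sku TEXT, qty INTEGER)")
    warehouse.executemany("INSERT INTO inventory_copy VALUES (?, ?)", rows)
    warehouse.commit()


def read_warehouse() -> List[Tuple[str, int]]:
    """Returns whatever the last batch loaded."""
    return warehouse.execute("SELECT sku, qty FROM inventory_copy ORDER BY sku").fetchall()


def read_virtual() -> List[Tuple[str, int]]:
    """Virtualized style: query the source itself at request time."""
    return source.execute("SELECT sku, qty FROM inventory ORDER BY sku").fetchall()


run_etl_batch()                                                  # scheduled batch load
source.execute("UPDATE inventory SET qty = 0 WHERE sku = 'A1'")  # stock sells out afterwards

print("ETL copy:    ", read_warehouse())  # still reports 10 units of A1
print("Virtual read:", read_virtual())    # reports 0 units of A1
```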

Data virtualization approaches data integration from a different angle, using a high-speed abstraction layer to cut data delivery times and enable self-serve analytics. A DV architecture tailored to the business can use APIs to discover virtualized data and expose it to third parties. As a result, businesses can expose data much faster and reduce latency, inconsistencies and duplicate data. Virtualized data also opens the door to advanced use cases such as machine learning, behavioral modeling and predictive analytics, benefiting business-critical areas.
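API-based discovery can be pictured as a catalog of virtual views that third parties query before requesting data. The Python sketch below assumes a hypothetical `ViewDescriptor` schema, two made-up views and a `catalog_response` function that serializes the catalog as JSON, the kind of payload a discovery endpoint might return. No specific DV product's API is implied.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ViewDescriptor:
    """Metadata a consumer needs to find and use a virtual view (hypothetical schema)."""
    name: str
    description: str
    columns: List[str]
    tags: List[str] = field(default_factory=list)


# Hypothetical catalog of views published by the virtual layer.
CATALOG = [
    ViewDescriptor("customers", "Customer master data",
                   ["id", "name", "region"], ["master-data"]),
    ViewDescriptor("orders", "Order lines from the e-commerce platform",
                   ["order_id", "customer_id", "amount"], ["sales"]),
]


def catalog_response(tag: str = "") -> str:
    """Return the catalog, optionally filtered by tag, as a JSON document."""
    views = [v for v in CATALOG if not tag or tag in v.tags]
    return json.dumps({"views": [asdict(v) for v in views]}, indent=2)


print(catalog_response(tag="sales"))
```

The descriptor deliberately lists columns but not physical locations, in keeping with the idea that consumers discover views rather than source systems.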

For most businesses, the three most significant impact areas of DV are:

1. Revenue opportunities:

DV accelerates the integration of multiple data sources, giving businesses the data they need to make timely, data-driven financial decisions.

2. Cost savings:

ETL processes require data to be physically moved and replicated, which is expensive and time-consuming. Data virtualization software combines middleware with an abstraction layer so that data can stay in place, reducing the storage footprint and, with it, the cost of maintaining the data architecture.

3. Simplified risk management and governance:

Data security and governance mature systematically as the data virtualization program evolves. By enforcing a single point of access with a predefined logical model and self-serve data catalogs, data owners can reduce the need for continuous oversight.

Let’s look at a few business areas where data virtualization provides the greatest value.

How does data virtualization fit into your business objectives?

Data virtualization delivers the speed needed to analyze data from IoT (Internet of Things) devices, 5G applications, SaaS (Software as a Service) solutions and edge devices. It applies across industries, for both operational and analytical purposes.

1. Empower Agile BI with accelerated data delivery

Traditional BI depends on ETL processes to source and transform data for consumption, which is slow, cost-intensive, and prone to security lapses and duplication. With data virtualization, businesses can abstract data sources from the consuming BI application through a logical data layer. This layer models the available data and exposes it to data users and applications for consumption. It hides the complexity of the data’s location, source and structure, delivering fresh data and helping teams stay on top of their data initiatives.

Irrespective of which BI tools are in use, reporting and analytics require underlying logic to combine data tables. Data virtualization uses a logical data fabric approach to aggregate data according to business rules and expose it to reporting solutions. As a result, businesses can significantly accelerate data delivery and react to changes faster.
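To illustrate how a logical view encodes a business rule once for every reporting tool, here is a minimal Python sketch. It assumes customer regions live in a CRM database (in-memory SQLite) and orders arrive as a CSV export; `revenue_by_region` is a hypothetical view name, and the rule that only shipped orders count as revenue is an assumption made for the example.

```python
import csv
import io
import sqlite3
from collections import defaultdict
from typing import Dict

# Source 1: CRM database with customer master data (in-memory SQLite for the demo).
crm = sqlite3.connect(":memory:")
crm.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT)")
crm.executemany("INSERT INTO customers VALUES (?, ?)",
                [(1, "EMEA"), (2, "APAC"), (3, "EMEA")])

# Source 2: order export from another system (CSV text standing in for a file).
ORDERS_CSV = """order_id,customer_id,amount,status
100,1,250.0,shipped
101,2,75.5,shipped
102,3,40.0,cancelled
103,3,120.0,shipped
"""


def revenue_by_region() -> Dict[str, float]:
    """Hypothetical logical view: revenue per region, computed at query time.

    Assumed business rule: only shipped orders count as revenue.
    """
    region_of = dict(crm.execute("SELECT id, region FROM customers"))
    totals: Dict[str, float] = defaultdict(float)
    for row in csv.DictReader(io.StringIO(ORDERS_CSV)):
        if row["status"] != "shipped":
            continue  # the rule lives in the view, not in each individual report
        region = region_of.get(int(row["customer_id"]), "UNKNOWN")
        totals[region] += float(row["amount"])
    return dict(sorted(totals.items()))


# Any BI tool calling this view gets the same, consistently defined figures.
print(revenue_by_region())
```

Keeping the join and the filter inside the view means a change to the rule is made in one place rather than in every dashboard.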

2. Unlock alternate revenue streams with data services

In our experience with data integration, data as a service is a strong opportunity to monetize the wealth of information available to your enterprise. Data virtualization can expose real-time data from multiple sources to consuming systems through APIs. These data services consolidate and reuse multi-source data to deliver additional value, such as analytics or reports, to internal users or external applications.
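A data service of this kind can be sketched as a small HTTP endpoint that combines two sources into a report. The Python example below assumes two hypothetical sources (`crm_customers`, `billing_invoices`) and a made-up `/api/spend-by-segment` path, and uses the standard-library WSGI interface. The `call` helper invokes the app in-process the way a WSGI server would, so the example runs without opening a port.

```python
import json
from typing import Dict, List
from wsgiref.util import setup_testing_defaults


# Two hypothetical sources already reachable through the virtual layer.
def crm_customers() -> List[Dict]:
    return [{"id": 1, "name": "Acme", "segment": "enterprise"},
            {"id": 2, "name": "Globex", "segment": "smb"}]


def billing_invoices() -> List[Dict]:
    return [{"customer_id": 1, "amount": 1200.0},
            {"customer_id": 1, "amount": 300.0},
            {"customer_id": 2, "amount": 90.0}]


def spend_by_segment() -> Dict[str, float]:
    """Data service: combines CRM and billing data into a reusable report."""
    segment_of = {c["id"]: c["segment"] for c in crm_customers()}
    report: Dict[str, float] = {}
    for inv in billing_invoices():
        segment = segment_of[inv["customer_id"]]
        report[segment] = report.get(segment, 0.0) + inv["amount"]
    return report


def app(environ, start_response):
    """Minimal WSGI app exposing the report at a hypothetical path."""
    if environ.get("PATH_INFO") == "/api/spend-by-segment":
        status, payload = "200 OK", spend_by_segment()
    else:
        status, payload = "404 Not Found", {"error": "not found"}
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]


def call(path: str):
    """Invoke the app in-process, as a WSGI server would, and decode the JSON body."""
    environ: Dict = {}
    setup_testing_defaults(environ)
    environ["PATH_INFO"] = path
    captured: Dict[str, str] = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)


print(call("/api/spend-by-segment"))
```

To serve it locally over HTTP, `app` could be passed to `wsgiref.simple_server.make_server("127.0.0.1", 8000, app)` followed by `serve_forever()`; an external-facing service would also need authentication and a production-grade server.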

3. Extract the maximum value from your big data framework

Data virtualization integrates with big data platforms, combining their analytics with traditional data warehouses, relational databases, data services and more to deliver advanced insights into operational processes at a fraction of the cost. It also lets data users access big data platforms such as Hadoop and Google BigQuery without having to navigate the complexity of the underlying data infrastructure.

4. Single view of truth

Organizations can use DV to aggregate information from multiple sources and derive a single view of truth based on operational requirements. With self-serve analytics, data engineers can build single-view applications that answer a particular query or serve a single business purpose. In hybrid or multi-cloud environments, data flows in from multiple applications, generating thousands of data points across disparate interactions. Single-view applications can provide unique insights into customer churn, pricing, marketing efficiency, product lifecycle management and more.
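A single-view application can be sketched as a function that assembles one customer's record on demand from several systems. The Python example below assumes three hypothetical in-memory sources (CRM, billing and support) keyed by a shared `customer_id`; the `churn_risk` flag uses a made-up rule purely for illustration and is not a recommended churn model.

```python
from typing import Dict, List

# Three hypothetical source systems, each holding part of the customer picture.
CRM = [{"customer_id": "C1", "name": "Acme"},
       {"customer_id": "C2", "name": "Globex"}]
BILLING = [{"customer_id": "C1", "mrr": 500.0},
           {"customer_id": "C2", "mrr": 120.0}]
SUPPORT = [{"customer_id": "C1", "ticket": "T-1", "open": True},
           {"customer_id": "C1", "ticket": "T-2", "open": False},
           {"customer_id": "C2", "ticket": "T-3", "open": False}]


def single_customer_view(customer_id: str) -> Dict[str, object]:
    """Assemble one customer's record on demand from all three sources."""
    profile = next((r for r in CRM if r["customer_id"] == customer_id), None)
    if profile is None:
        raise KeyError(customer_id)
    mrr = sum(r["mrr"] for r in BILLING if r["customer_id"] == customer_id)
    open_tickets: List[str] = [r["ticket"] for r in SUPPORT
                               if r["customer_id"] == customer_id and r["open"]]
    return {
        "customer_id": customer_id,
        "name": profile["name"],
        "monthly_revenue": mrr,
        "open_tickets": open_tickets,
        # Illustrative churn signal (assumed rule): a paying customer with unresolved issues.
        "churn_risk": mrr > 0 and len(open_tickets) > 0,
    }


print(single_customer_view("C1"))
```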

5. Streamline and automate data governance and security processes

Data governance and security have become more challenging over the years, growing hand in hand with the amount of available data. They are unlikely to get much easier; ultimately, it comes down to streamlining data management as far as possible. Traditional ETL methods depend on replicating and physically transporting data for integration, which produces multiple data copies that further complicate governance efforts. Similarly, different applications and distributed data systems often have differing access controls and permissions.

Data virtualization takes a technology-agnostic approach to data security and governance: because data is exposed through a logical layer, consumers are shielded from the data’s structure, query language, source and other implementation details. The logical data fabric routes data quality checks through a single point of access, which takes much of the pain out of data governance by linking objects in the governance policy directly to the data virtualization layer and centralizing access points.
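Centralized access control can be pictured as one function that every request passes through, regardless of which source the rows came from. The Python sketch below assumes a hypothetical role-to-columns `POLICY` and uses column masking as the enforcement technique; real platforms typically support richer policies (row-level filters, attribute-based rules), so this shows only the single-access-point idea.

```python
from typing import Dict, List, Set

Row = Dict[str, object]

# Hypothetical central policy: the columns each role may see unmasked.
POLICY: Dict[str, Set[str]] = {
    "analyst": {"customer_id", "region", "amount"},
    "finance": {"customer_id", "region", "amount", "email"},
}
MASK = "***"


def secure_read(role: str, rows: List[Row]) -> List[Row]:
    """Single access point: every request is checked against the same policy."""
    if role not in POLICY:
        raise PermissionError(f"role {role!r} is not allowed to query this view")
    allowed = POLICY[role]
    # Mask, rather than drop, columns the role may not see, so the view's shape stays stable.
    return [{col: (val if col in allowed else MASK) for col, val in row.items()}
            for row in rows]


# Rows as returned from any underlying source (database, API, file, ...).
ORDERS: List[Row] = [
    {"customer_id": 1, "email": "ops@acme.example", "region": "EMEA", "amount": 250.0},
]

print(secure_read("analyst", ORDERS))
print(secure_read("finance", ORDERS))
```

Because the policy sits in the virtual layer rather than in each source system, changing who can see `email` is a single edit to `POLICY`.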

Next steps

Data virtualization makes it possible to experiment freely with data and to explore uses beyond traditional applications. However, to get the most out of a DV program, businesses need a dedicated data management team of data scientists, engineers, analysts, strategists and stewards, each with clearly defined roles and a clear view of business goals.